Teaching an AI to Drive a Taxi – A Friendly Guide to Q-Learning

Let's kick start your journey into reinforcement learning with a cool taxi-driving simulation! You'll get hands-on with Q-learning, starting from random exploration all the way to nailing it. Plus, we'll walk you through the whole process with easy-to-follow Python code and real-life comparisons to make it click.

Teaching an AI Taxi Driver: A Deep Dive into Q-Learning

Hey there! Ever wanted to teach a computer how to drive a taxi? Today we’re going to do exactly that, using something called Q-learning. Don’t worry if you’re not a coding expert – I’ll walk you through it step by step! We’ll see how an AI can learn through trial and error, just like we humans do. Let’s roll up our sleeves and dig into how this actually works.

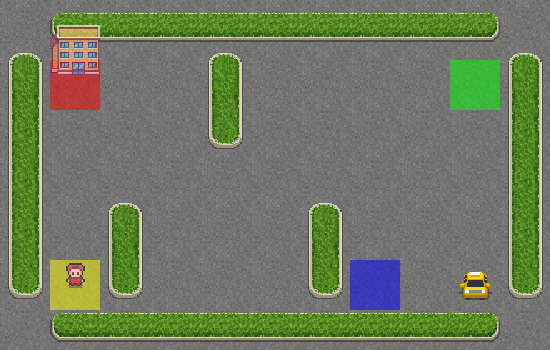

Setting Up Our Virtual Taxi World

First things first, we need to create our virtual world. We’re using a package called Gymnasium (formerly known as OpenAI Gym) which gives us this cool taxi environment to play with. Here’s how we get started:

1

2

3

4

5

6

import gymnasium as gym

from gymnasium.wrappers import TimeLimit

import numpy as np

import random

env = gym.make("Taxi-v3")

This creates our taxi world, but before our AI can start driving, we need to set up some important parameters. Think of these as the “learning style” of our AI:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

# How much does our AI value new experiences vs old ones?

# Think of it like this: if you burn your hand on a hot stove,

# you'll probably give that new experience a lot of weight (0.9 = 90% weight to new experiences)

alpha = 0.9

# How much do we care about future rewards vs immediate ones?

# Like saving money - do you spend $10 now or save it for $19 next week?

# 0.95 means we care a lot about future rewards

gamma = 0.95

# At first, how often should we try random actions?

# Imagine being in a new city - initially you'll want to explore a lot!

# 1.0 means 100% random actions at the start

epsilon = 1

# How quickly do we reduce our random exploration?

# Like when you're learning a new city - each day you explore a bit less

# and stick more to the routes you know work well

epsilon_decay = 0.9995

# What's the minimum amount of random exploration we want?

# Even when you know your city well, you might still try new routes occasionally

# 0.01 means we'll always have at least 1% chance of trying something random

min_epsilon = 0.01

# How many times will our AI "live" through the taxi scenario?

# Think of each episode as a different customer with a different destination

num_episodes = 9876

# How many actions can our taxi take in each "life"?

# Like a taxi driver's shift - too short and they can't complete the journey,

# too long and they might get lost or waste time

# Take the example of getting sent to buy baguettes at the local bakery

# Chances are, if you're not back withing a few minutes

# something probably went wrong!

max_episode_steps = 98

The Brain of Our AI: The Q-Table

Our AI’s “memory book” - starts empty (all zeros) because it knows nothing, like a brand new driver with an empty notebook, ready to write down what works and what doesn’t for every situation they encounter

1

Q_table = np.zeros((env.observation_space.n, env.action_space.n))

This creates a table with 500 rows (all possible states) and 6 columns (all possible actions). At first, it’s filled with zeros because our AI knows nothing. Each cell will eventually contain a “quality score” for taking a specific action in a specific state.

Quick Math Break: Why 500 States?

Our taxi world has:

- 25 possible taxi positions on the grid

- 5 possible passenger positions (4 locations plus being in the taxi)

- 4 possible destination locations So: 25 × 5 × 4 = 500 possible states!

1

2

# Up, Down, Right, Left, Pick a passenger, Drop him

env.action_space.n

1

6

1

env.observation_space.n

1

500

not all of these states are actually accessible! 🤔 Can you figure out why?

Hint: If you pick up a passenger from location C how many possible destinations could they have?

Teaching Our AI to Make Decisions

One of the coolest parts is how our AI decides what to do. We wrote a function called select_action that balances between trying random stuff and using what it’s learned:

1

2

3

4

5

6

7

8

9

10

def select_action(state, Q_table, epsilon=1, epsilon_decay=0.995, min_epsilon=0.01):

if random.uniform(0, 1) < epsilon:

# Try something random!

action = env.action_space.sample()

else:

# Use what we've learned

action = np.argmax(Q_table[state, :])

# Reduce randomness for next time, but not below our minimum

return action, max(min_epsilon, epsilon * epsilon_decay)

This is like having an experienced driver (Q-table knowledge) with a dash of spontaneity (epsilon). As training goes on, we rely more on experience and less on random choices.

Now we have a mechanism to chose actions, but before that we need to to initialize our environment to get a first state, where our taxi driver starts his journey

1

state = env.reset()

Now that we have our initial state, we can use our mechanism to select the next state.

1

action, _ = select_action(state, Q_table)

But what happens when we step trough our environment?

1

env.step(action)

1

2

3

4

5

(369,

-1,

False,

False,

{'prob': 1.0, 'action_mask': array([1, 1, 1, 0, 0, 0], dtype=int8)})

Let’s dig into what each of these values, from the documentation we find that:

1

2

3

env.step

Returns: The environment step (observation, reward, terminated, truncated, info) \\

with truncated=True if the number of steps elapsed >= max episode steps

The values returned are as follows:

Next State: The state that follows the current one.

- Reward System:

- -1 for each step taken, unless another reward is triggered.

- +20 for successfully delivering a passenger.

- -10 for attempting “pickup” or “drop-off” actions illegally.

Termination: Indicates whether the taxi has successfully dropped off the passenger.

Truncate: Signals whether the episode ended due to reaching the maximum number of steps.

- Dictionary Elements:

- Probability (prob): The likelihood of transitioning to the specified state, which is 1 in this case (deterministic).

- Action Mask: Shows allowed actions based on the current state, such as avoiding collisions with walls.

The Learning Loop: Where the Magic Happens

Let’s plug what we’ve learned so far into a loop:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

# Enforce the: try not to get lost buying the baguettes

env = TimeLimit(env, max_episode_steps=max_episode_steps)

# How many `reincarnations`

for episode in tqdm(range(num_episodes)):

# Get the state of the new life

state = env.reset()[0]

# Check that you're not lost

while True:

# pick an action

action, epsilon = select_action(state,Q_table, epsilon)

# Move in the environment

next_state, reward, terminated, truncated, info = env.step(action)

# make sure to update the current state

state = next_state

# exit when reaching the place, or hit max_episode_steps

if terminated or truncated:

break

OK great…but where the heck is the learning part of Q-learning?

Let me first describe the idea behind it with an example: if at some point in your adventurer’s journey you find a treasure, then you should remember the path that lead you there, and the way you do that is by distribution the a reward back to that path.

1

2

3

4

5

6

7

8

# here the path is described by our Q_table

# We grab the old state's reward given the action that we have taken (previous path)

prev_state_reward = Q_table[state, action]

# So we need to grab the reward that we could get from going the next_state (our treasure)

potential_max_next_reward = np.max(Q_table[next_state, :])

# Aaand update the old state's reward using the parameter we've defined previously

Q_table[state, action] = prev_state_reward + \\

alpha * (reward + gamma * potential_max_next_reward - prev_state_reward)

So the whole training looks like this:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

# How many `reincarnations`

for episode in tqdm(range(num_episodes)):

# Get the state of the new life

state = env.reset()[0]

# Try not to let your child get lost

while True:

# pick an action

action, epsilon = select_action(state, epsilon)

# Move in the environment

next_state, reward, terminated, truncated, info = env.step(action)

# here the path is described by our Q_table

# So we need t grab the max reward that we could get

# from going the next_state

potential_max_next_reward = np.max(Q_table[next_state, :])

# grab the old state's reward given the action that we have taken

prev_state_reward = Q_table[state, action]

# Aaand update it using the parameter we've defined previously

Q_table[state, action] = prev_state_reward + alpha * (reward + gamma * potential_max_next_reward - prev_state_reward)

# make sure to update the current state

state = next_state

# exit when reaching the place, or hit max_episode_steps

if terminated or truncated:

break

Testing Our AI Driver

After all that training, we want to see how well our AI drives! We can watch it in action:

1

2

3

4

5

6

7

8

9

10

11

12

13

env = gym.make('Taxi-v3', render_mode='rgb_array')

state = env.reset()[0]

while True:

env.render()

# Now we just use what we've learned - no more random actions!

action = np.argmax(Q_table[state, :])

next_state, reward, terminated, truncated, info = env.step(action)

state = next_state

if terminated:

print('Successfully delivered passenger!')

break

The Results Are In

Remember how we tested it for 5 rides? Each time, our AI successfully picked up and delivered the passenger! This might not sound impressive until you realize:

- It started knowing absolutely nothing

- It learned everything through trial and error

- It had to figure out complex sequences of actions

- It learned to avoid costly mistakes

Why This Matters Beyond Taxi Driving

This simple experiment shows some profound things about AI and learning:

- Complex behaviors can emerge from simple reward systems

- Balance between exploration and exploitation is crucial

- Learning from experience can be more effective than following rigid rules

- Small, immediate feedback can lead to sophisticated long-term strategies

Want to Try It Yourself?

You can find the notebook in this repository: GitHub - blog_assets

The great thing about this code is that it’s relatively simple but demonstrates some really powerful concepts. You can modify the rewards, change the learning parameters, or even try it on different Gymnasium environments!

What’s Next?

Stay tuned! Next time we might tackle some more advanced concepts like dealing with continuous state spaces or using neural networks for Q-learning (spoiler: that’s what Deep Q-Learning is all about!)